Last Week in AI #250: Microsoft's Phi-2, Mistral's Mixtral 8x7B, efficient sequence modeling with Mamba, $24 Deepfakes disrupting Bangladesh's election, and more!

The small 2.7B Phi-2 model is surprisingly strong, Mistral releases an MoE model, Mamba may replace transformers for better performance, and cheap deepfakes ran amok in election campaigns.

Top News

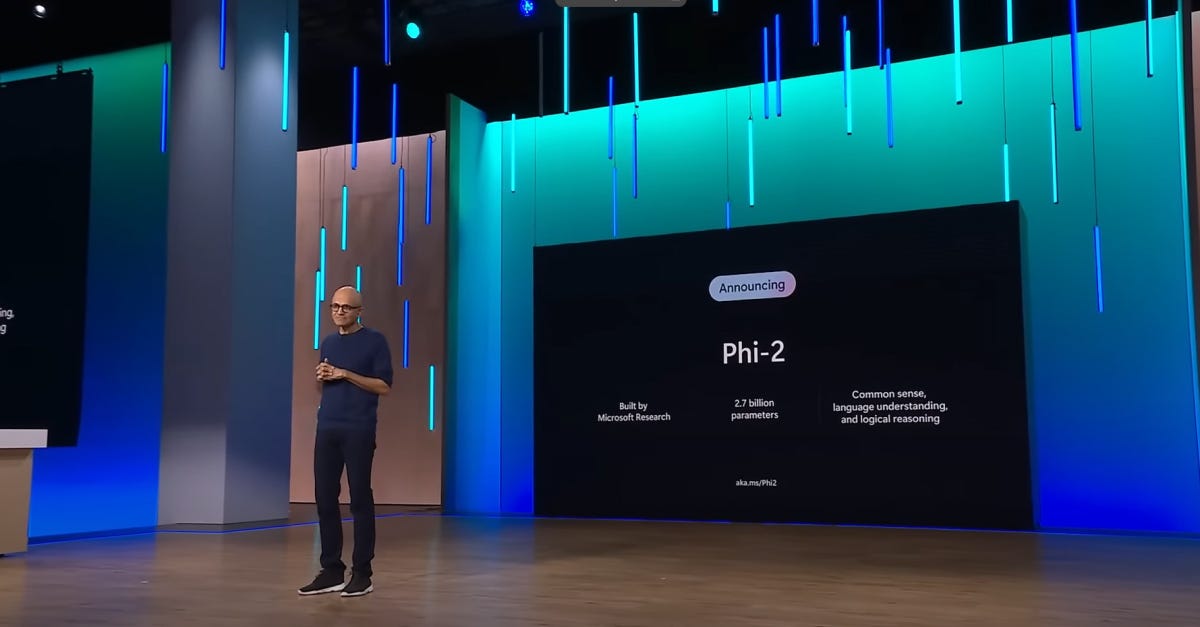

Phi-2: The surprising power of small language models

Microsoft's Machine Learning Foundations team has developed a new small language model (SLM) named Phi-2, which demonstrates impressive reasoning and language understanding capabilities. Despite having only 2.7 billion parameters, Phi-2 has shown performance equivalent to or better …