Last Week in AI #321 - Anthropic & Midjourney Lawsuits, Bad Jobs Data

Judge puts Anthropic’s $1.5 billion book piracy settlement on hold; Warner Bros. Joins Studios’ AI Copyright Battle Against Midjourney; Anthropic endorses California’s AI safety bill SB 53

Top News

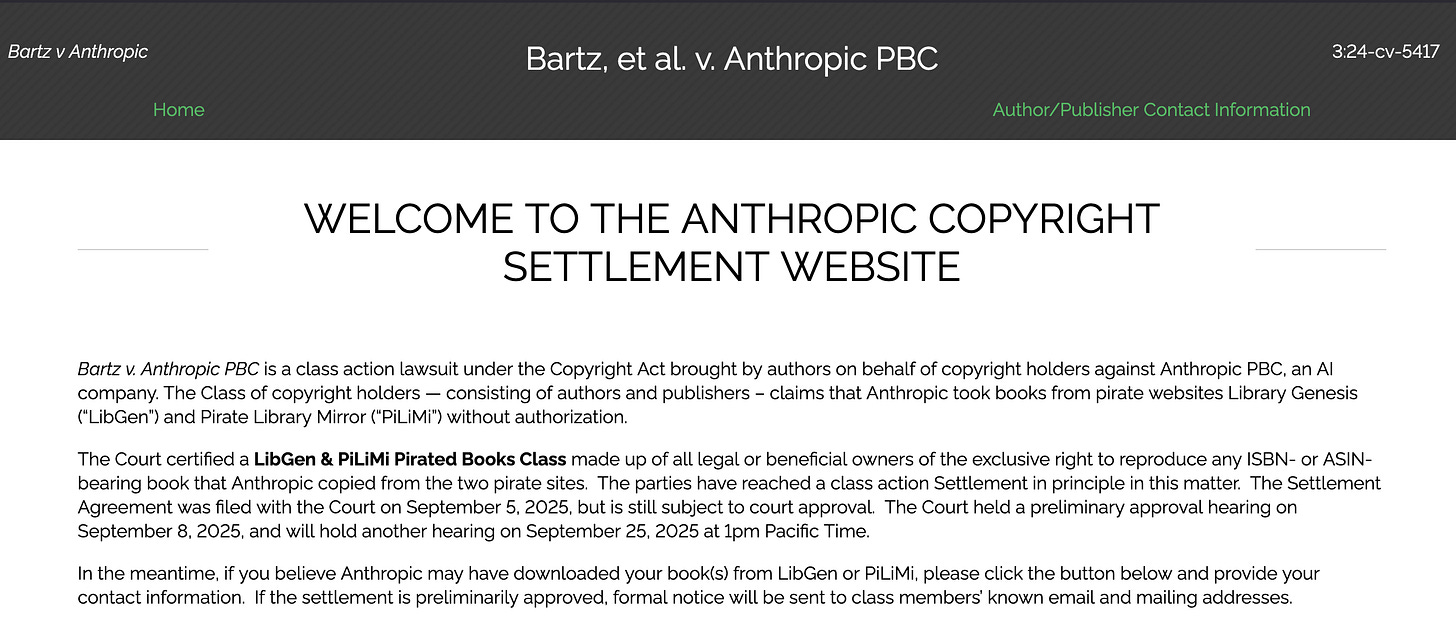

Judge puts Anthropic’s $1.5 billion book piracy settlement on hold

A federal judge has paused Anthropic’s proposed $1.5 billion class-action settlement with U.S. authors, citing concerns the deal was crafted “behind closed doors” and could be forced “down the throats of authors.” Judge William Alsup, who previously ruled that training on purchase…