Last Week in AI #327 - Gemini 3, Opus 4.5, Nano Banana Pro, GPT-5.1-Codex-Max

It's a big week! Lots of exciting releases, plus Nvidia earnings and a whole bunch of cool research.

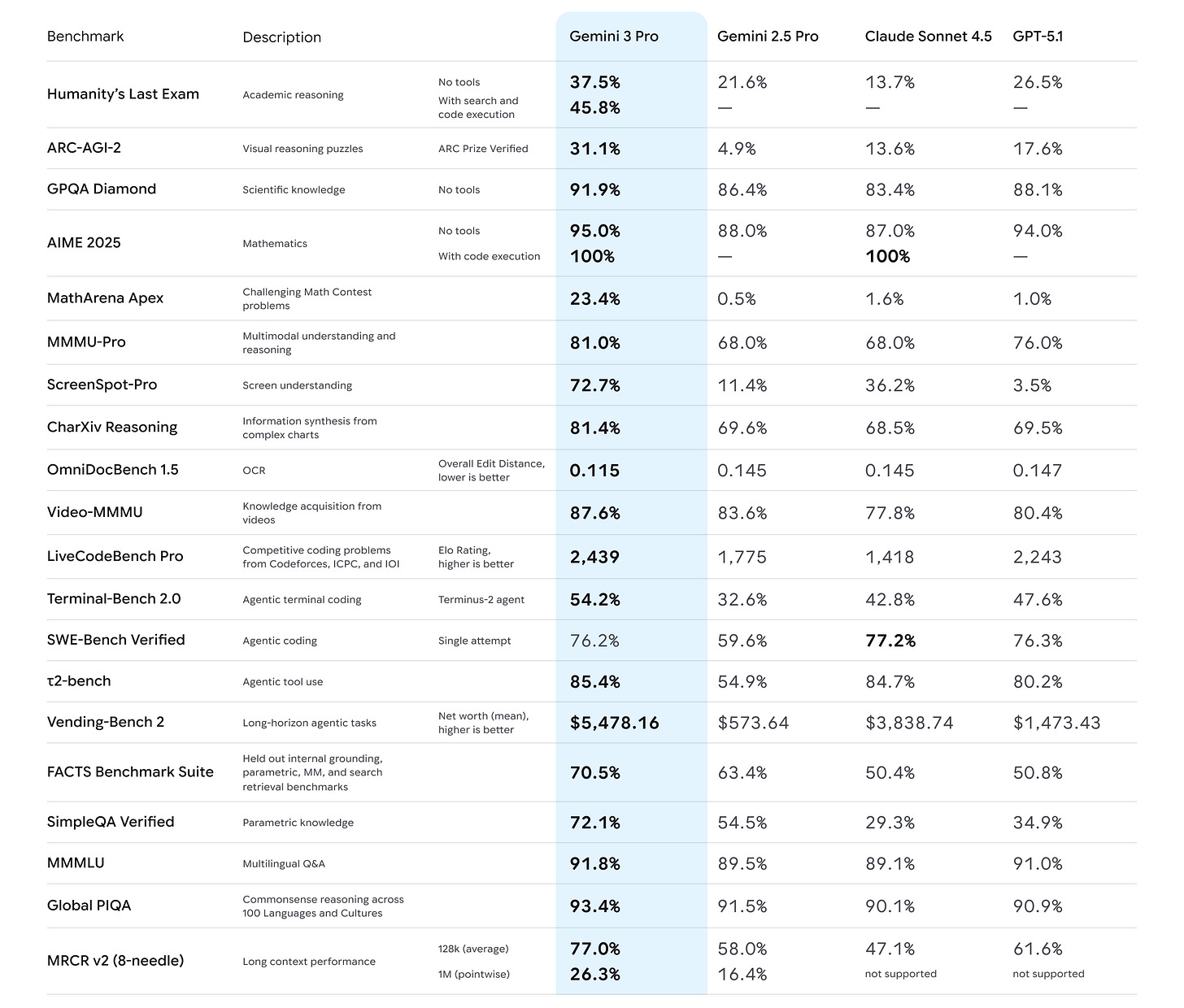

Google launches Gemini 3 with new coding app and record benchmark scores

Google unveiled Gemini 3, its most capable foundation model to date, now live in the Gemini app and AI Search, with a research-tier Gemini 3 Deep Think …