Last Week in AI #329 - GPT 5.2, GenAI.mil, Disney in Sora

GPT-5.2 is OpenAI’s latest move in the agentic AI battle, Google is powering a new US military AI platform, and Trump moves to stop states from regulating AI

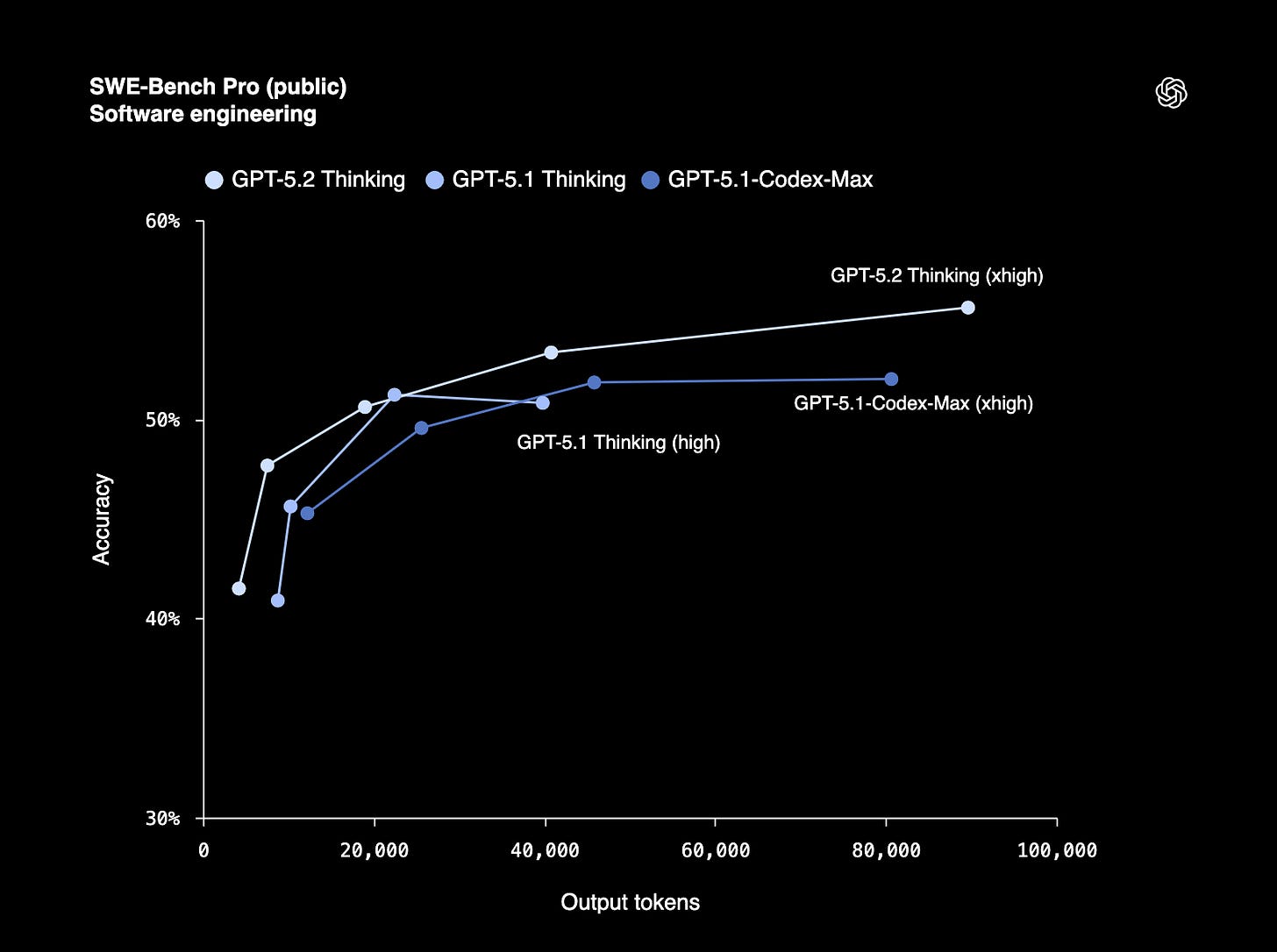

GPT-5.2 is OpenAI’s latest move in the agentic AI battle

OpenAI released the GPT-5.2 model series—Instant, Thinking, and Pro—positioned as its best for everyday professional use, with upgrades in spreadsheet and presentation creation, coding, image perception, long-context understanding, tool use,…