Last Week in AI #331 - Nvidia announcements, Grok bikini prompts, RAISE Act

Nvidia Details New A.I. Chips and Autonomous Car Project, Grok is undressing anyone, NY passes AI regulation

Nvidia Details New A.I. Chips and Autonomous Car Project With Mercedes

Related:

Nvidia launches Alpamayo, open AI models that allow autonomous vehicles to ‘think like a human’

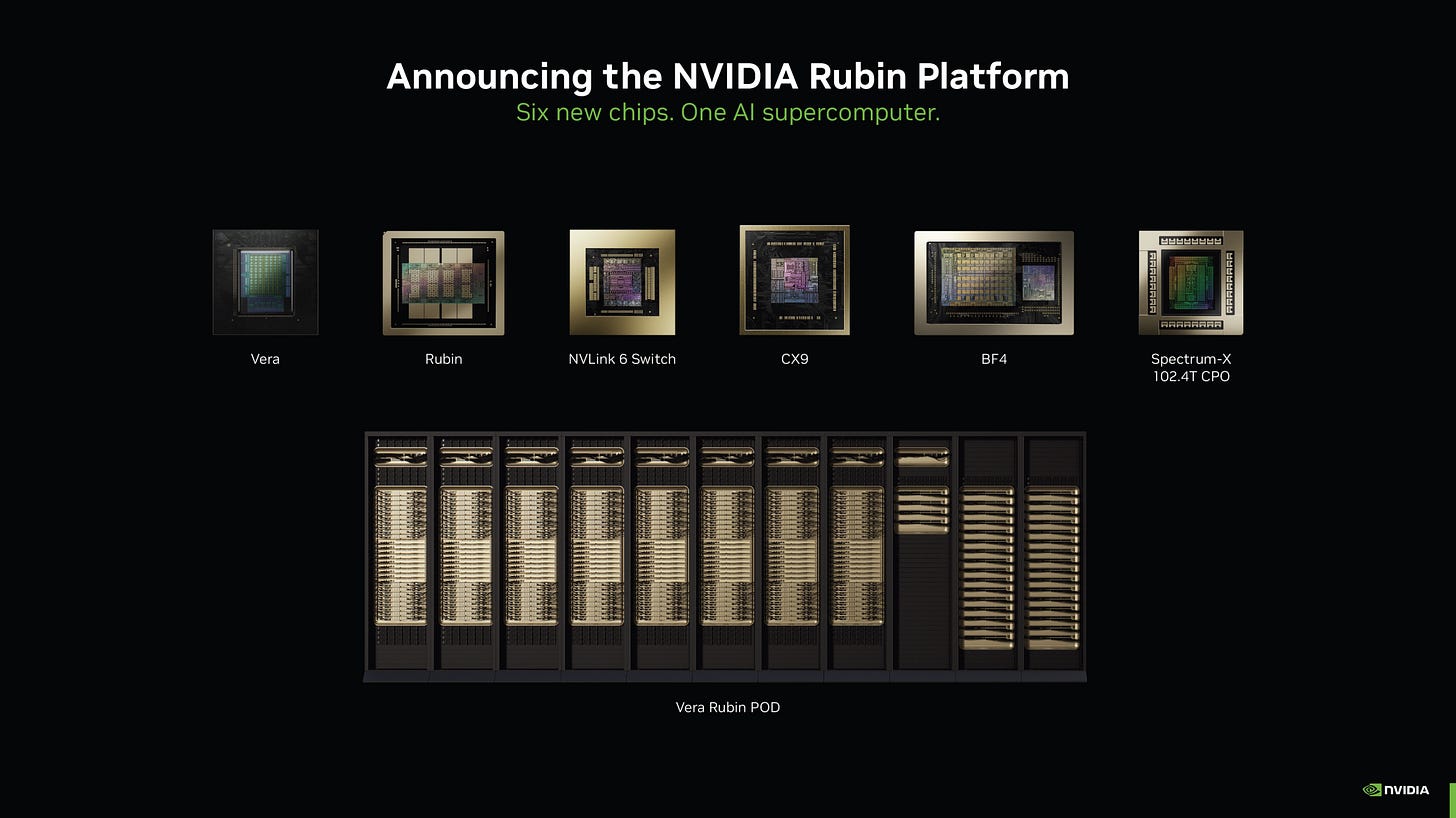

Nvidia launches Vera Rubin AI computing platform at CES 2026

At CES 2026, Nvidia CEO Jensen Huang announced the company’s new AI chip, Vera Rubin, which will begin shipping to custome…