A special non-news episode in which Andrey and Jeremie discuss AI X-Risk!

Please let us know if you'd like us to record more of this sort of thing by emailing contact@lastweekin.ai or commenting wherever you listen.

Outline:

(00:00) Intro

(03:55) Topic overview

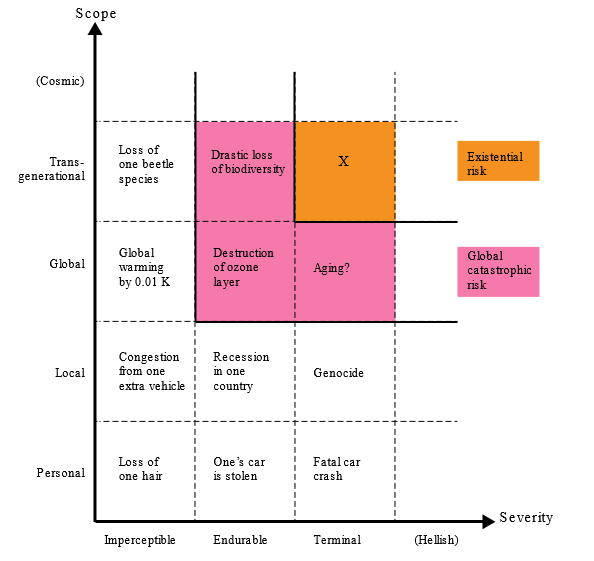

(10:22) Definitions of terms

(35:25) AI X-Risk scenarios

(41:00) Pathways to Extinction

(52:48) Relevant assumptions

(58:45) Our positions on AI X-Risk

(01:08:10) General Debate

(01:31:25) Positive/Negative transfer

(01:37:40) X-Risk within 5 years

(01:46:50) Can we control an AGI?

(01:55:22) AI Safety Aesthetics

(02:00:53) Recap

(02:02:20) Outer vs inner alignment

(02:06:45) AI safety and policy today

(02:15:35) Outro

Links